Taurus HPC Cluster

Active Compute Nodes: Always check this list to see which compute nodes are available on the cluster. Compute nodes are updated frequently. Move your files to your shared folder before switching to a new compute node. (updated 2023-06-20)

- leo1.local.net to leo8.local.net: gcc, g++, OpenMP, MPI, CUDA 12.1, Python 3, MATLAB R2023a, xRDP

- taurus1.local.net to taurus8.local.net (newest): gcc, g++, OpenMP, MPI, CUDA 12.5, Python 3, MATLAB R2023a, xRDP

Important note: When following the instructions on this page, make sure that you replace the hostname taurus with the hostname of the compute nodes that you are using.

1. Introduction

This guide walks you through the steps of connecting to the Taurus High Performance Computing (HPC) Cluster, installing Visual Studio Code on your computer and compiling and running programs in C/C++, OpenMP, CUDA, MPI, MATLAB and Python. Before connecting to the cluster, you will need a username and password. Contact the system administrator, Dr. Vincent Roberge (vincent.roberge(at)rmc.ca), to get an account created for you. If you intend to use MATLAB on the cluster, you will also need an OpenVPN config file which will be supplied by the system administrator.

The Taurus HPC Cluster’s configuration is based on the Linux Containers (LXC) virtualization technology. The host Linux operating systems offer minimal services besides the LXC virtualization, and all compute nodes are implemented in LXC containers. Containers run in unprivileged mode to allow direct access to the GPUs. A preconfigured set of containers has been deployed by the system administrator to allow users to program in C/C++, OpenMP, CUDA, MPI, MATLAB and Python. However, if your research project requires your own container(s) so that you can install your own software and libraries, this is possible. Discuss your requirements with the system administrator.

2. Cluster Specifications

The Taurus HPC Cluster is composed of eight Linux compute nodes (taurus1.local.net to taurus8.local.net) and one Linux client workstation (desktop-usb.local.net). All hosts are connected with a 10 Gbps low-latency converged Ethernet switch. The Linux nodes are Dell Precision servers equipped with 128 GB of RAM and dual Intel Xeon Silver 4214R CPUs, each with 12 hyper-threaded cores, for a total of 24 cores or 48 virtual cores per node. Each node is configured with an NVIDIA RTX 4070 Ti graphics processing unit (GPU) with 7,680 CUDA cores and 12 GB of GDDR6X memory, supporting CUDA compute capability 8.9. The Linux nodes run Ubuntu 22.04 and MATLAB Parallel Server R2023a. The Taurus HPC Cluster provides a combined processing power of 1.23 TFLOPS on the CPUs and 119.2 TFLOPS on the GPUs.

3. Connecting to the Taurus HPC Cluster

There are five ways to connect to the Taurus HPC Cluster:

- Remote SSH connection

- Remote VPN connection

- Local Wifi connection

- Local GUI terminal connection with USB

- Remote desktop connection to Linux

Each method gives you different capabilities. The SSH and VPN connections allow you to connect to the cluster from anywhere on the internet. The Wifi and local terminal connections give you the best bandwidth, and the remote desktop connection allows you to easily run graphical applications.

SSH connection: SSH is the simplest of the five methods and allows you to program on the cluster using a Linux command prompt or Visual Studio Code. If you are programming in C, C++, CUDA or Python, SSH is all you should need. SSH uses a single port.

VPN connection: If you need to program in Matlab or if you need a remote desktop connection to a Linux server or a Windows server on the Taurus HPC Cluster, you will need to establish a VPN connection. A VPN connection gives you a private and secure tunnel to the Taurus subnet. Any traffic destined to the internet goes out on your local internet connection, but any traffic destined to the Taurus subnet is forwarded to the VPN tunnel.

Local Wifi network: If you are physically located in the Electrical and Computer Engineering (ECE) lab, the preferred method to connect to the cluster is using the local Taurus Wifi network. You can connect to this network using your personal computer. If you require a standalone computer for this purpose (i.e., a computer that is not configured to connect to the RMC domain), you can borrow a laptop from the ECE tech shop.

Local terminal connection: The last method to connect to the Taurus HPC Cluster is to use the client workstation that is physically located beside the cluster in the ECE Power Lab s4205. This computer is configured with Linux and joined to the Taurus domain. It is mostly used to copy files to and from the cluster using USB ports.

The following subsections describe in detail how to connect to the Taurus HPC Cluster using each of these methods.

3.1. SSH Connection to the Cluster

To connect to the computer cluster, you need to access an SSH gateway:

- Host: tauruscluster.duckdns.org

- Port: 81

Once on the gateway, you can access the first node of the cluster also using SSH:

- Host: taurus1.local.net

- Port: 22

Note that you cannot open an SSH terminal directly on the SSH gateway server. The gateway is configured as an SSH jump host only. You do not need to worry about the details, simply follow the instructions below. These instructions are written for a Windows computer, but can easily be adjusted for Linux or Mac. We leave this as an exercise for the reader.
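As a starting point for that exercise, the Windows steps below can be reproduced on Linux or macOS with a few shell commands. The following is a minimal sketch, assuming the OpenSSH client is installed. The helper name write_taurus_ssh_config and its optional directory argument are illustrative only and are not part of the cluster tooling. Unlike the Windows steps, it appends to an existing configuration file instead of overwriting it.

```shell
# Sketch: append the two Host entries used in this guide to an SSH client config.
# write_taurus_ssh_config is a hypothetical helper name.
#   $1 = your cluster username
#   $2 = directory holding the config file (defaults to ~/.ssh)
write_taurus_ssh_config() {
    ssh_user="$1"
    ssh_dir="${2:-$HOME/.ssh}"
    mkdir -p "$ssh_dir" && chmod 700 "$ssh_dir"
    cat >> "$ssh_dir/config" <<EOF
Host tauruscluster
    HostName tauruscluster.duckdns.org
    Port 81
    User $ssh_user

Host taurus1
    HostName taurus1.local.net
    ProxyJump tauruscluster
    User $ssh_user
EOF
    chmod 600 "$ssh_dir/config"
}

# Usage: write_taurus_ssh_config your_username
```

After running the helper once with your own username, `ssh taurus1` should behave as described for Windows in the rest of this section.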

Important note: In this manual, commands that are to be run on Windows start with “>” and commands that are to be run on the compute cluster start with “$”. Do not include the “>” or “$” when typing your commands.

On your Windows computer, start a command prompt (not PowerShell but cmd.exe) and type:

> mkdir %USERPROFILE%\.ssh

> type nul > %USERPROFILE%\.ssh\config

> notepad %USERPROFILE%\.ssh\config

Enter the following text and save the file. Make sure that you replace "username" with your actual username as assigned to you by the system administrator.

Host tauruscluster

HostName tauruscluster.duckdns.org

Port 81

User username

Host taurus1

HostName taurus1.local.net

ProxyJump tauruscluster

User username

From the command prompt, you can now connect to the taurus1 server using the following command. It should prompt you for your password twice and then ask you to change your password.

> ssh taurus1

Once you have successfully logged on to taurus1, it is recommended to change your password using the following command. You must use a complex password (more than 8 characters, with uppercase letters, lowercase letters and digits).

$ passwd

You can now log off.

$ exit

From your Windows computer, generate an SSH public key that you will upload onto the taurus1 server. This will allow you to log onto the cluster without a password. This will save you quite a bit of time later on when programming in Visual Studio Code.

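In the Windows command prompt, generate an RSA key pair and copy the public key to the taurus1 server. The -t rsa option ensures that the key files are named id_rsa and id_rsa.pub, as assumed by the commands that follow:

> ssh-keygen -t rsa

> type %USERPROFILE%\.ssh\id_rsa.pub | ssh taurus1 "cat > id_rsa.pub"

Now, log in to the server again and add the public key to your domain account: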

> ssh taurus1

$ dos2unix id_rsa.pub

$ kinit

$ ipa user-mod username --sshpubkey="$(cat id_rsa.pub)"

You can now log off and try logging into taurus1 again; it should not ask you for a password. Note that it may take a minute or two for your key to propagate to all the domain hosts. If the system prompts you for a password, do not worry; simply try again later.

$ exit

> ssh taurus1

If you move to a different computer and want to add multiple SSH keys to your account, upload all your keys to your home directory on taurus1 and then use the ipa user-mod command with multiple instances of the --sshpubkey option to add all your RSA keys at once. All keys must be added in the same command.

Create a key on the first computer and upload it to the server. Note that the first key is named id_rsa1.pub when uploaded onto the taurus1 server:

> type %USERPROFILE%\.ssh\id_rsa.pub | ssh taurus1 "cat > id_rsa1.pub"

Create a key on the second computer and upload it to the server. Now the key is named id_rsa2.pub:

> type %USERPROFILE%\.ssh\id_rsa.pub | ssh taurus1 "cat > id_rsa2.pub"

Log in to the server and add your keys to your domain account:

> ssh taurus1

$ dos2unix id_rsa1.pub id_rsa2.pub

$ kinit

$ ipa user-mod username --sshpubkey="$(cat id_rsa1.pub)" --sshpubkey="$(cat id_rsa2.pub)"

If needed, you can delete your SSH public keys from your domain account using:

$ kinit

$ ipa user-mod username --sshpubkey=

3.2. VPN Connection

If you want to program in MATLAB or if you want to establish a remote desktop connection to a Linux or a Windows server on the cluster, you need to first establish a VPN connection. If you are using SSH, the VPN connection is not needed, but could be used. If you decide to do so, make sure that you configure your SSH client so that you do not go through the SSH gateway, but that you connect directly to your destination machine. Let’s continue with the VPN connection. First, you will need to install a VPN client on your computer. It is recommended to install the OpenVPN client.

Important note: This section can only be done on your personal computer at home or on the RMC wireless network as the RMC Enterprise Network does not open the destination port used by the VPN connection.

The OpenVPN client can be downloaded here. Once downloaded and installed, the client will ask you for a profile file. Get the profile file from the system administrator. Each user has a unique profile file that identifies them on the network, so you must not share your profile file with other users. Loading the profile file is the only configuration needed to establish the network connection.

To confirm that you are connected to the Taurus HPC Cluster, open a Windows command prompt and type:

> nslookup taurus1.local.net

You should receive a 192.168.90.x address.
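On a Linux client, the same check can be scripted. The sketch below only tests whether an address falls in the 192.168.90.x range mentioned above. The function name in_taurus_subnet is hypothetical, and the commented lookup assumes that the getent command is available on your system.

```shell
# Return success (0) if the given IPv4 address starts with 192.168.90.
in_taurus_subnet() {
    case "$1" in
        192.168.90.*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example on Linux (assumes getent is installed):
# addr=$(getent hosts taurus1.local.net | awk '{print $1}')
# if in_taurus_subnet "$addr"; then echo "VPN is up"; else echo "VPN is not connected"; fi
```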

3.3. Local Wifi Network

If you are physically located at RMC, the recommended way to connect to the Taurus HPC Cluster is through the local Taurus Wifi network. Several Taurus Wifi access points have been installed throughout the ECE department. Search for the Wifi network named “Taurus”.

Important note: Before you connect to the network, you need to install a certificate on your computer. This will allow you to access the Wifi login page securely. You will have to complete these steps from a computer that is connected to the internet and then copy the certificate to the computer you will connect to the Taurus Wifi.

To install a certificate in Windows:

- Download the taurus certificate here.

- Right-click on the certificate file and select Open with > Crypto Shell Extension.

- Click on Install Certificate

- Select Local Machine and click Next

- Select Place all certificates in the following store and click on Browse

- Select Trusted Root Certificate Authorities and click OK. Click Next.

- Click Finish. Click OK to close the initial window.

- Restart your computer.

At this point, the Microsoft Edge browser will use your newly added certificate when browsing the web. However, if you use Firefox, you need to configure Firefox to also use this certificate.

- In Firefox, type about:config in the address bar.

- If prompted, accept any warnings.

- Search for security.enterprise_roots.enabled and set it to true.

- Close and restart Firefox.

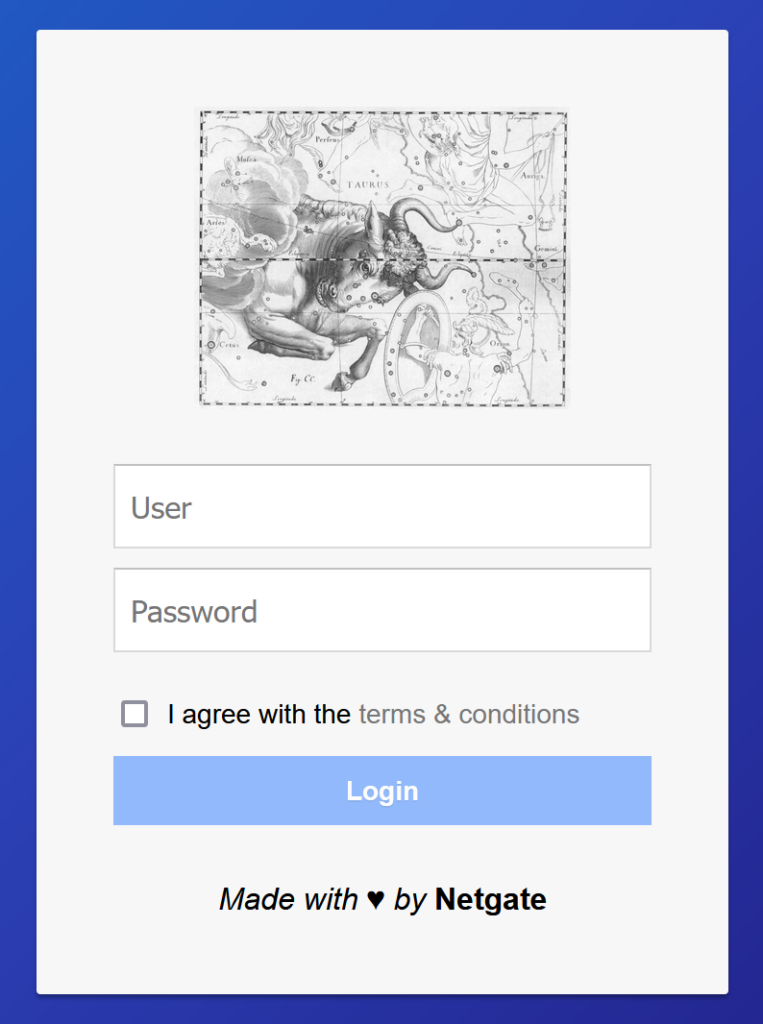

You are now ready to connect to the Taurus Wifi hotspot. Once connected, browse to any https website such as https://google.com. A warning saying “Connect to a Wifi hotspot” will appear. Click on Connect. You will automatically be redirected to the Taurus network captive portal welcome page as shown below. You need to accept the user agreement and enter your domain credentials. Note that until you enter your domain credentials, all network traffic from your computer will be blocked. Once you are logged in, you will get access to the Taurus network and the internet for a period of 1 day at which point you will need to re-authenticate.

Note: Internet access from the Taurus network is filtered to prevent accidental browsing to unprofessional websites. Also, all DNS requests are logged. If you find that a website is blocked wrongfully, please contact the system administrator.

3.4. Local GUI Terminal

There is one workstation that is physically co-located with the cluster to allow users to connect a USB external drive and transfer data to and from the cluster. This is useful to upload and download machine learning datasets as an example. The workstation is located in the ECE Power Lab s4205. You can log on using your Taurus HPC Cluster user account. The workstation is running Ubuntu Desktop and automatically mounts any FAT32, NTFS or Ext3/4 USB drives connected to it. You can then drag-n-drop your files to your user shared directory (discussed in the next section). The USB interface is 3.0 and allows for a fast transfer rate.

3.5. Remote Desktop Connection to Linux

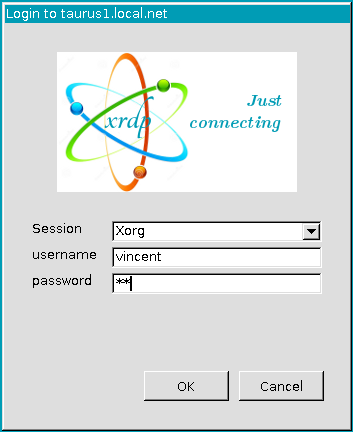

A remote desktop connection is only possible if you are connected to the Taurus network through Wifi or VPN. This section discusses how to establish a Remote Desktop Protocol (RDP) connection to a Linux host from a Windows client. In Windows, go to Start > Remote Desktop Connection. Type the hostname (e.g., taurus1.local.net) and your username. The following window may pop up. Make sure that you set the session to Xorg.

Once connected, you have access to a full desktop as in the figure below. You can start the MATLAB application from Application > Others > Matlab. You can drag-and-drop the MATLAB icon to the taskbar to create a quick launcher icon.

If you are using a high-DPI monitor (e.g., a 4K monitor) and your remote desktop appears too small, open a terminal and type the following command:

$ Screen2x.sh

It will change the scaling and log you out so that the change is applied. Re-establish the RDP connection. Note that the remote computer uses the XFCE desktop environment and only allows you to use 1x and 2x scaling. To go back to 1x, run:

$ Screen1x.sh

You can also copy and paste files using Ctrl-C and Ctrl-V between your local Windows computer and the remote taurus node. You can close the remote desktop window by clicking on the "X" icon. This will not terminate any program in your remote session. You can then turn off your local computer and reconnect at a later time to continue where you left off.

4. Shared Folder and Daily Backups

The Taurus HPC Cluster is configured with a shared directory accessible from every taurus node. When you log onto a taurus node, the shared directory is accessible from your home directory at /home/username/shared. It is recommended to use this folder for all your work. If you transfer data to and from the cluster using the Local GUI Terminal, make sure that you copy your files to your shared directory. The /home/username/shared directory is a symlink to /mnt/nfs_shared/username. A symlink is convenient and does not incur any performance penalty; however, it does not play nicely with GDB when debugging code, as GDB does not follow symlinks. For this reason, we have created a mount point to your shared folder located at /home/username/shared_mount. This uses bindfs and works nicely with GDB; however, the performance is significantly degraded. Which one should you use? Use both, depending on what you are doing.
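To see the difference between the two paths for yourself, you can ask the shell what each one is. This is a small sketch; describe_path is a hypothetical helper name. Based on the description above, ~/shared would be expected to be reported as a symlink and ~/shared_mount as a directory, but the exact output depends on how your node is set up.

```shell
# Report whether a path is a symbolic link (and its target) or a real directory.
describe_path() {
    if [ -L "$1" ]; then
        printf '%s is a symlink to %s\n' "$1" "$(readlink "$1")"
    elif [ -d "$1" ]; then
        printf '%s is a directory or mount point\n' "$1"
    else
        printf '%s does not exist\n' "$1"
    fi
}

describe_path "$HOME/shared"
describe_path "$HOME/shared_mount"
```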

This shared folder is backed up every night for 7 days, every week for 4 weeks and every month for 3 months. If you accidentally delete files, browse to your /home/username/backup directory to recover them.

5. Installing Visual Studio Code

Visual Studio Code (VSCode) is a free Integrated Development Environment (IDE) that runs on several operating systems including Windows, Linux and Mac. It is highly configurable and allows you to develop code using Makefile and perform remote debugging without any lag. This makes it the perfect IDE to program on the Taurus cluster. VSCode is free, you can download it from the internet and install it on your computer. VSCode does not require admin privilege to install and installs by default in your user profile directory.

Once installed, start VSCode. On the left toolbar, click on the Extensions icon and install the following extensions:

- Nsight Visual Studio Code Edition

- C/C++ IntelliSense

- Remote Development

- Makefile Tools

- Shell Debugger

Once these extensions are installed, you can use VSCode to connect to taurus1. Click on the remote connection button on the bottom left corner of the VSCode window and select Connect to Host. Type taurus1.

It may ask you for the operating system of the remote host, select Linux. It may also ask you for your password a few times (this is if your SSH key has not propagated to the SSH gateway host yet), enter it each time. Once you are connected, click on Menu > Terminal > New Terminal. This gives you a bash terminal on taurus1. You can type id and pwd to confirm that you are connected as yourself and that you are in your home directory.

Important note: Once connected to a remote server, VSCode will automatically install the VSCode server in your home directory on the remote server; this may take a few seconds. You will also need to reinstall the extensions listed above in VSCode once you are running on the remote server.

6. Running a C/C++ Program

This section covers how to compile, run and debug a C/C++ program on the Taurus HPC Cluster using VSCode. First, use the following command in the VSCode terminal to download the example code in your home directory:

$ cd ~

$ wget --user cluster --password computing https://roberge.segfaults.net/wordpress/files/cluster/prime_cpp.zip

Unzip the start code:

$ unzip prime_cpp.zip

Go to Menu > File > Open Folder and open the prime_cpp directory. Inspect the content of the prime.cpp file. This code computes the number of prime numbers between 2 and n. To compile and run the code, click on the debug icon on the left toolbar to open the debug window and then select Run debug from the drop-down menu in the left window. Then click on the green arrow just left of the drop-down menu. The start code should run successfully. You should see the output of the program in the TERMINAL window.

Important note: To run your code, always use the Run and Debug (Ctrl+Shift+D) button on the left toolbar, select the run configuration in the drop-down menu and click Start Debugging (F5). This allows you to use the Makefile and the build and launch tasks that have been manually programmed in the tasks.json and launch.json files (keep reading to learn about these two files).

Tip: If you use the Debug or run button on the right-hand side of VSCode, you will be using some default task and launch parameters and the project will fail. To avoid this mistake, right-click on the Debug or run button on the right-hand side of VSCode and disable its visibility.

This project has been configured to be compiled using a Makefile. Inspect the content of the source file and the Makefile. The Makefile is a bit complex and is very complete. It can be used as a starting point when creating your own C/C++ projects. Now, inspect the content of the .vscode directory which is used by VSCode to configure the project.

The c_cpp_properties.json file is used by VSCode to perform syntax highlighting and to allow you to see function definitions and use auto-completion. It is not used during compilation. If the c_cpp_properties.json file is not configured correctly, you may see errors highlighted in your code even though your code still compiles correctly. These errors are false positives due to the misconfiguration.

The launch.json file configures the launch options for your program. In this example, there are two launch configurations: “Run debug” and “Run release”. For both configurations, you can see the path to the binary being executed. You can also see the arguments used. Note that the launch configurations were configured with a pre-launch task which compiles the program before it is run.

The tasks.json file configures the compilation tasks. Here, it calls the Makefile with the appropriate target.

To debug your program, add breakpoints by clicking to the left of the line number in the .cpp source file and compile and run your program in debug mode. When the program stops at a breakpoint, you can see the content of variables on the left window. To see the content of arrays, add an entry such as the following in the watch window:

(double[5]) *input

This entry would allow you to see the first 5 values of the array input. By the way, there is no array in this C++ example, but knowing this trick will prove invaluable when writing your own code in VSCode. To print the value of a single element of an array, you can type something like the following in the DEBUG CONSOLE tab:

input[5]

This entry would allow you to see the 6th element of array input. Remember that C/C++ is zero indexed.

Another useful trick: VSCode has an auto-format feature that fixes the indentation of your code. To use it, press:

- On Windows: Shift + Alt + F

- On Mac: Shift + Option + F

- On Ubuntu: Ctrl + Shift + I

Once your code is working in debug mode, compile it and run it in release mode. This will ensure that it works correctly in release mode. However, to measure accurate runtimes, you must run the code without the GDB debugger attached. For this, go to the Terminal tab and call the program directly. In this case, call:

$ release/prime

7. Running an OpenMP Program (multicore CPU)

Now that you have run a sequential program, let’s use OpenMP to take advantage of the multicore processors installed in the Taurus HPC Cluster. Download the example code:

$ cd ~

$ wget --user cluster --password computing https://roberge.segfaults.net/wordpress/files/cluster/prime_omp.zip

Unzip the start code:

$ unzip prime_omp.zip

Go to Menu > File > Open Folder and open the prime_omp directory. Inspect the content of the prime.cpp file. This code also computes the number of prime numbers between 2 and n, but now uses multiple threads. You can inspect the Makefile to see the options used to compile the program.

Adjust the number of threads and run the program. Run it outside of the debugger to get the real runtime of the program. As an example, the following command will run the program with 4 to 12 threads with increments of 4.

$ make release

$ release/prime_omp 4 4 12

Inspect the launch.json file in the .vscode directory to see how VSCode can be configured to pass arguments to the program.
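To make the argument convention concrete, the sketch below lists the thread counts covered by a given set of arguments. It assumes that the three arguments mean first thread count, increment and last thread count, as the example above suggests. thread_counts is a hypothetical helper and is not part of the prime_omp project.

```shell
# Print the thread counts covered by "prime_omp FIRST STEP LAST",
# assuming the arguments mean first, increment and last.
thread_counts() {
    t="$1"
    while [ "$t" -le "$3" ]; do
        printf '%s\n' "$t"
        t=$((t + $2))
    done
}

thread_counts 4 4 12    # prints 4, 8 and 12, one per line
```

To measure the runtime outside the debugger, you can prefix the program with the shell's time keyword, for example `time release/prime_omp 4 4 12`.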

8. Running a CUDA Program (GPU)

The GPU contains a very large number of cores when compared to multicore CPUs, but each core is much simpler in design. GPUs are optimized for massively parallel programs that exploit data-level parallelism. To test the GPU installed on the Taurus HPC Cluster, download the CUDA example program using the following commands:

$ cd ~

$ wget --user cluster --password computing https://roberge.segfaults.net/wordpress/files/cluster/prime_cuda.zip

Unzip the start code:

$ unzip prime_cuda.zip

Go to Menu > File > Open Folder and open the prime_cuda directory. Inspect the content of the prime.cu file, the Makefile and the files in the .vscode directory. When you are ready, compile and run the example program using the launch button. You should note a much higher speedup compared to the OpenMP program.

9. Running an MPI Program (Distributed and Multicore)

For highly complex tasks, it is sometimes necessary to use the computing power of multiple computers connected together in a cluster. This can be achieved using a high-performance multi-process library for distributed systems such as Message Passing Interface (MPI). MPI programs must run from a directory that is shared between all the nodes in the cluster. For this reason, change directory to ~/shared and download the MPI example program there:

$ cd ~/shared

$ wget --user cluster --password computing https://roberge.segfaults.net/wordpress/files/cluster/prime_mpi.zip

Unzip the start code:

$ unzip prime_mpi.zip

Go to Menu > File > Open Folder and open the prime_mpi directory. Inspect the content of the various files in the directory.

Your MPI program will run on 4 nodes (taurus1 to taurus4) and will make use of all the cores on the CPUs. Before you run the program, you must acquire a Kerberos ticket from the domain controller which will allow you to access the other nodes without a password. You must also log in to the other 3 taurus nodes so that a soft link to your shared directory gets created in your home directory. The nodes have been configured so that the soft link gets created the first time you open a Bash shell on the node. To do this, run the following commands on taurus1. It can be done right from the terminal window of VSCode.

$ ssh $USER@taurus2.local.net

$ exit

$ ssh $USER@taurus3.local.net

$ exit

$ ssh $USER@taurus4.local.net

$ exit

This previous step only needs to be done the first time you use the Taurus HPC Cluster. However, at the beginning of every MPI programming session, you will need to enter the command below to reacquire your Kerberos ticket and to remount the shared drive on all taurus nodes:

$ make init

You are now ready to compile and run the MPI example program. Use the launch button and select mpirun release. Mpirun is the application that launches the multiple processes on the cluster computers.
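The one-time logins to taurus2 through taurus4 described earlier in this section can also be written as a loop. This is only a sketch: visit_nodes and the SSH_CMD override are hypothetical names introduced here for illustration. Each session is interactive, so you still type exit to move on to the next node.

```shell
# Open an SSH session on each listed taurus node in turn.
#   $1 = username, remaining arguments = node numbers
# SSH_CMD is a hypothetical override, used here only to preview the commands.
visit_nodes() {
    node_user="$1"
    shift
    for n in "$@"; do
        ${SSH_CMD:-ssh} "$node_user@taurus$n.local.net"
    done
}

# Preview the targets without connecting:
SSH_CMD=echo visit_nodes "$(id -un)" 2 3 4

# Real run on taurus1 (type "exit" in each session to continue):
# visit_nodes "$USER" 2 3 4
```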

Debugging an MPI program is a bit tricky because mpirun is the first process to run which in turn starts several instances of your program. If you try debugging your program in the standard way, the debugger will start debugging mpirun and not your application. This will fail catastrophically. To debug your MPI program, compile your program in DEBUG mode using the Makefile. Note that there is a line of code at the beginning of the main() function that is included only in debug mode:

#ifndef NDEBUG

wait_for_debugger(world_rank, 0);

#endif

This line of code makes process 0 wait for the debugger to attach to it. Once the program is compiled in debug mode, insert at least one breakpoint in the source code and use the command prompt to launch the program:

$ make run-debug

Process 0 will spin lock until the debugger attaches to it. Now, from VSCode, use the launch button and select attach debug. You will be prompted to select the PID of the process to attach to. Typically, process 0 of your program will be the process named prime_mpi with the lowest PID. Once attached, process 0 will start running automatically and should stop at your first breakpoint. You can now use the debugger normally.
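If several prime_mpi processes are listed and it is not obvious which one has the lowest PID, a short shell pipeline can find it from a second terminal. This is a sketch: lowest_pid is a hypothetical helper, and the commented command assumes that pgrep is available on the node. Only processes running on the node where you type the command are listed.

```shell
# Read PIDs (one per line) on standard input and print the smallest one.
lowest_pid() {
    sort -n | head -n 1
}

# On the node where process 0 runs (assumes pgrep is installed):
# pgrep -x prime_mpi | lowest_pid
```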

10. Running MATLAB on a Single Cluster Node (Multicore and GPU)

This section explains how to open a remote session on a taurus host and run MATLAB locally on the taurus host. When using this method, your code and project files reside on the taurus host. Only the GUI is sent over the network. This method allows you to start MATLAB on a taurus host, start a long-running job, disconnect and come back at a later time to check the progress of your job. It is also very useful if you have a MATLAB program that uses or generates large files.

There are two ways of opening a remote session on a taurus node. The first method is the preferred one and consists of using the Remote Desktop Protocol (RDP). See the details in section 3.5. Make sure that you establish a VPN connection before making the RDP connection. With an RDP connection, you have access to a full desktop environment.

The second method of establishing a remote connection is to forward the X11 window display over SSH. This way, MATLAB runs on the remote computer, but the MATLAB GUI is displayed on your local computer. This method does not give you a full desktop environment; it only forwards the MATLAB GUI to your local computer. You do not need a VPN connection for this method.

10.1. Option 1: Remote Desktop Protocol (RDP) (preferred method)

To initiate a remote desktop connection to a taurus host, you must first establish a VPN connection to the cluster (section 3.2). Then, follow the instructions in section 3.5.

Once you are in your remote session, you can start MATLAB from the start menu. If you find that the scaling of the MATLAB GUI is not good, you can also adjust it. This time, you can use fractional scaling. From within MATLAB, type the following command to use a scaling of 2x as an example. You must restart MATLAB for the changes to apply.

>> s = settings; s.matlab.desktop.DisplayScaleFactor

>> s.matlab.desktop.DisplayScaleFactor.PersonalValue = 2

>> exit

Now that MATLAB is running, you can write code, start a script and so on. You can also copy and paste files using Ctrl-C and Ctrl-V between your local Windows computer and the remote taurus node. You can close the remote desktop window by clicking on the "X" icon. This will not terminate MATLAB or your remote session. You can then turn off your local computer and reconnect at a later time to see the result of your program.

10.2. Option 2: Using X11 forwarding

This method does not require a VPN connection, but only an SSH connection. If you are using a Windows computer, you will need to use the MobaXterm remote terminal application. This application is similar to Putty, but allows you to forward the X11 GUI applications. Download the free version of MobaXterm here. You can use the portable or installable versions.

Start MobaXterm and perform some initial configurations:

- On the main window, go to Menu > Settings > Configuration.

- On the X11 tab, set the DPI drop-down menu to auto and click OK.

- Back to the main window, click on the Session icon.

- Click on SSH and enter taurus1.local.net and your username and port 22.

- Go to the Advanced SSH settings tab.

- Enable X11 forwarding and compression.

- If you want to connect without a password, click on the private key button and browse to the SSH key you generated earlier, most likely C:\Users\.ssh\id_rsa.

- Go to the Network settings tab and click on the SSH gateway (jumphost) button.

- Click OK. MobaXterm will warn you that this is the first time you connect to this server and ask whether you want to trust the identity of the server; click Accept.

- You should now be logged into the taurus node.

- In the future, you can log into the taurus node simply by right-clicking on the session icon in the left window and selecting Execute.

You can now start MATLAB. You have three options:

1. To start MATLAB with full GUI support use:

$ matlab

2. To start MATLAB with the command prompt only, but to be able to plot figures, use:

$ matlab -nodesktop -nosplash

3. To start MATLAB with the command prompt only and no graphic capabilities at all, use:

$ matlab -nodisplay -nojvm

If you started MATLAB with full GUI support on a high-DPI monitor (e.g., a 4K monitor) and the GUI is too small, type the following commands in the MATLAB command prompt to scale your display. Change the scaling factor (here set to 2) to your liking. You must restart MATLAB for the changes to take effect.

>> s = settings; s.matlab.desktop.DisplayScaleFactor

>> s.matlab.desktop.DisplayScaleFactor.PersonalValue = 2

>> exit

To test MATLAB and plot a figure, run the following command:

x = 0:pi/100:2*pi; y = sin(x); plot(x,y);

Notes for Linux Users: This setup can be done from a Linux computer simply by initiating an SSH connection with the -XC options to forward the X11 GUI server and compress the communication for higher bandwidth. If you are running Linux Ubuntu, you will have to edit the login manager configuration file to switch from the Wayland window system protocol to X11. Check the current session type, set WaylandEnable=false in the configuration file and reboot, using the following commands:

$ echo $XDG_SESSION_TYPE

$ sudo nano /etc/gdm3/custom.conf

WaylandEnable=false

$ sudo reboot

10.3. Error Regarding Insufficient Memory

If when running your MATLAB code you get an error regarding insufficient memory, this is caused by the default amount of RAM allocated to the Java Heap not being enough. There are two ways to fix this.

- Method 1: Before you start MATLAB, open the MATLAB preferences file and set the maximum Java heap size, here to 4 GB (4,096 MB):

$ nano ~/.matlab/R2023a/matlab.prf

JavaMemHeapMax=I4096

- Method 2: Once MATLAB is started, navigate to Home tab > Preferences > General > Java Heap Memory and set memory to 4,096 MB or 8,192 MB as required.
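Method 1 can also be scripted. The following Python sketch is illustrative only and not part of the official procedure: the helper name `set_java_heap` is hypothetical, and it assumes the preferences file is plain text with one `Key=Value` entry per line, as the `JavaMemHeapMax=I4096` entry suggests. Run it only while MATLAB is closed.

```python
from pathlib import Path


def set_java_heap(prf_path, megabytes=4096):
    """Set (or add) the JavaMemHeapMax entry in a MATLAB preferences file.

    Assumes one Key=Value entry per line; the heap size is written as an
    integer number of megabytes prefixed with 'I', as in JavaMemHeapMax=I4096.
    """
    path = Path(prf_path).expanduser()
    lines = path.read_text().splitlines() if path.exists() else []
    entry = f"JavaMemHeapMax=I{megabytes}"

    for i, line in enumerate(lines):
        if line.startswith("JavaMemHeapMax="):
            lines[i] = entry  # replace the existing setting
            break
    else:
        lines.append(entry)  # no existing setting: append one

    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text("\n".join(lines) + "\n")
    return entry

# Example call (path taken from Method 1):
# set_java_heap("~/.matlab/R2023a/matlab.prf", 4096)
```

Passing 8192 instead of 4096 would request an 8 GB heap, matching the larger value offered in Method 2.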

11. Running MATLAB on Multiple Cluster Nodes (Distributed, Multicore and GPU)

11.1. Prerequisites

The compute nodes are configured with MATLAB R2023a. You must use this exact version on your personal computer. If you do not have this version, you must install it using your RMC email account. This may require you to create a MathWorks account. When installing MATLAB, make sure that you install the Parallel Computing Toolbox.

When connecting a MATLAB client to the MATLAB Parallel Server, several ports are used for the communication. For this reason, before you can run a job on the cluster, you must establish a VPN connection to the Taurus HPC Cluster subnet using the OpenVPN client. Refer to section 3.2 to establish the VPN connection.
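Before starting a job, it can help to confirm that the VPN is up and that the scheduler host is reachable. The Python sketch below is a minimal reachability check, not part of the official setup: the helper name `can_connect` is hypothetical, and the port in the usage comment (27350, assumed here to be the MATLAB Job Scheduler base port) is an assumption that should be confirmed with the system administrator.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False

# Example (assumed port; adjust to the value used on the cluster):
# print(can_connect("taurus1.local.net", 27350))
```

A result of False with the VPN connected suggests checking the hostname or the port, while False for every host suggests the VPN connection itself is not established.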

11.2. Starting a MATLAB Job Scheduler

The MATLAB Job Scheduler requires a secret file on your computer. This secret file is used to encrypt the communication between your computer and the compute cluster, and it must match the one present on the compute cluster. You can download the secret file here. Once downloaded, copy it to C:\Program Files\MATLAB\R2023a\toolbox\parallel\bin\.

The next step is to start a MATLAB Job Scheduler and a group of worker processes. To do this, start the following executable (you can create a shortcut on your desktop) on your Windows computer:

C:\Program Files\MATLAB\R2023a\toolbox\parallel\bin\admincenter.bat

If the <matlab>\toolbox\parallel directory does not exist, the Parallel Computing Toolbox is not installed on your computer; rerun the MATLAB installer and add it.

Once the Admin Center is started, click on File > Change Shared Secret File and browse to your secret file. You are now ready to use the Admin Center.

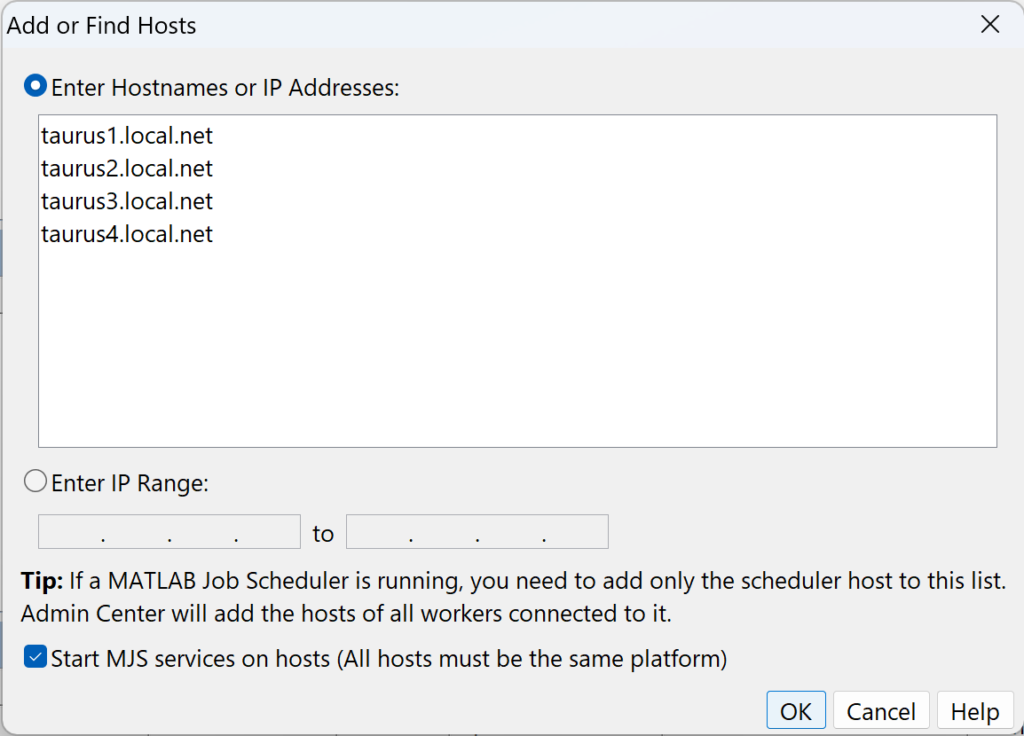

Click on Add or Find host and add the taurus hosts (compute nodes):

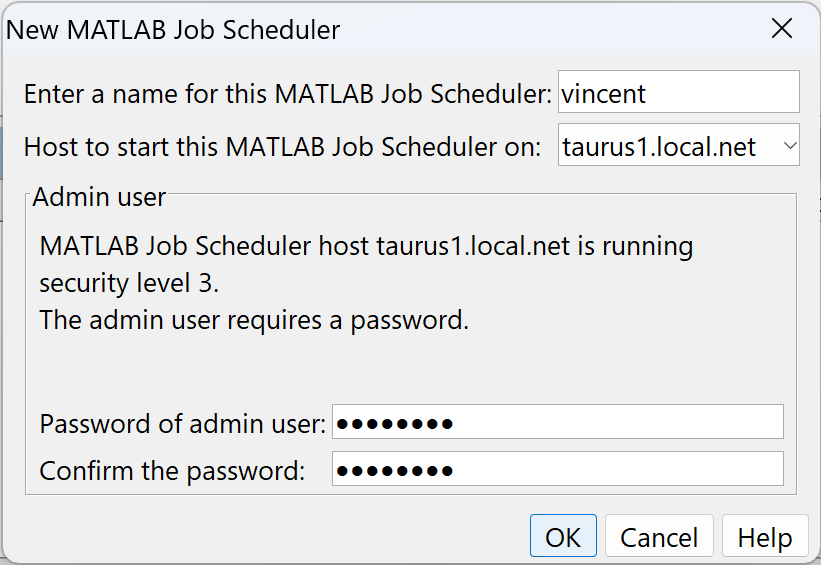

Once added, in the MATLAB Job Scheduler window, click on Start to create a job manager on taurus1.local.net. Name your scheduler with your username. This will allow other users to identify your scheduler as yours. You can use any password as the admin user password. This password is only valid for the duration of the scheduler. You should stop your scheduler when you are done using the compute cluster.

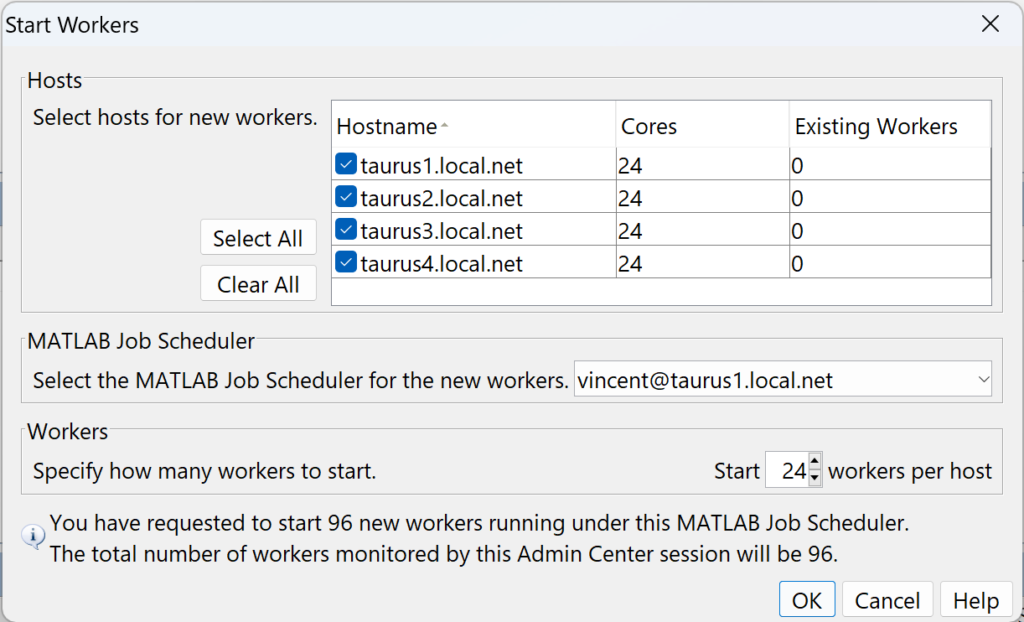

Now that the scheduler is started, in the Workers window, click on Start to create workers. Since each host has 24 cores, it is recommended to create a maximum of 24 workers per host for a total of 96 workers. Creating 96 workers takes time; please be patient. You only need to create them at the beginning of your work day and destroy them at the end of the day or when you are done working on your project.
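The worker limit is simple arithmetic: one worker per physical core on each host. As a small Python sketch (the function name `max_workers` is hypothetical, and the host count of four is an assumption inferred from the 96-worker total at 24 workers per host):

```python
def max_workers(hosts, cores_per_host=24):
    """Upper bound on MATLAB workers, at one worker per physical core."""
    return hosts * cores_per_host


# Four hosts at 24 workers each give the 96-worker total.
print(max_workers(4))
```

If you add fewer hosts in Admin Center, scale the number of workers down accordingly.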

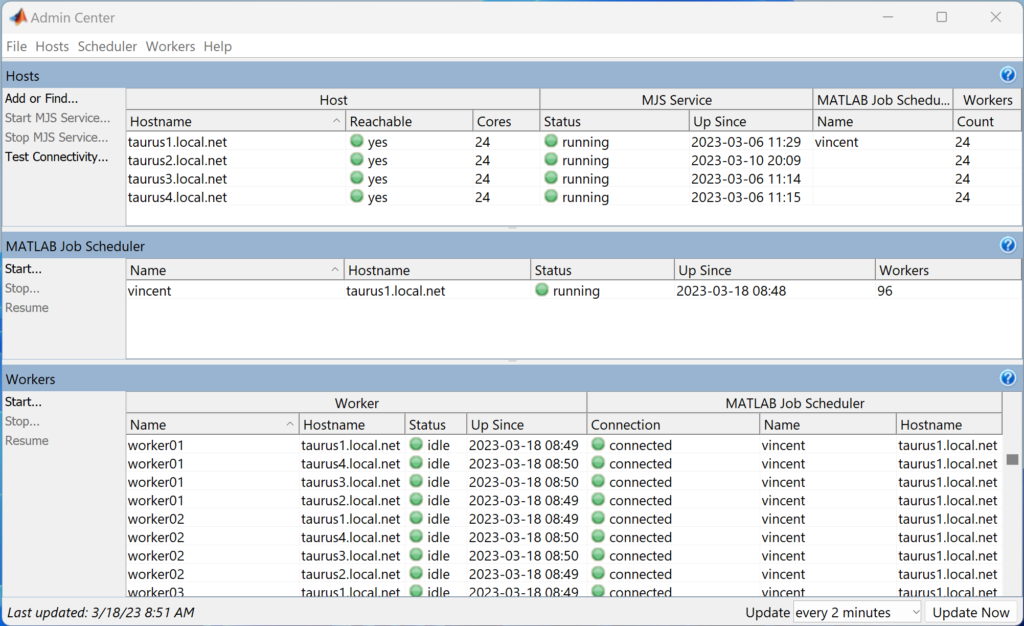

Once your workers are started, your Admin Center window should look like this:

You can now minimize Admin Center and start the normal MATLAB program.

11.3. Connecting to MATLAB Workers

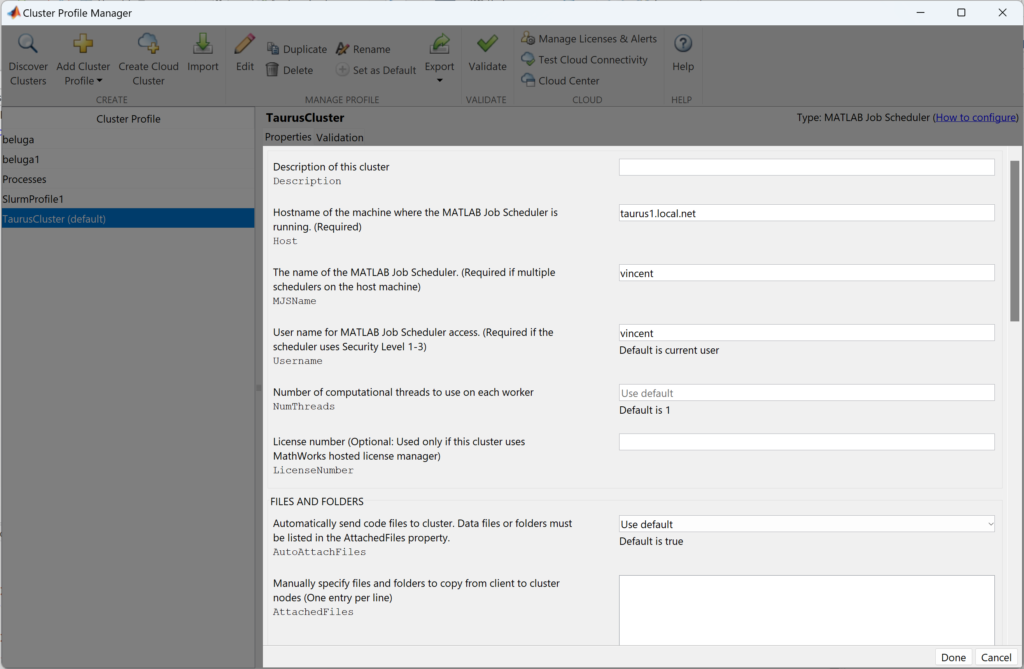

In MATLAB, on the Home tab, go to Parallel > Create and Manage Clusters. Click on the Add Cluster Profile icon and select Matlab Job Scheduler. This should create a profile named “MJSProfile1”. Right-click on the profile name and rename the profile to “TaurusCluster”. With “TaurusCluster” selected, click on the Edit icon and configure your cluster as follows:

- Description: Taurus Cluster

- Host name: taurus1.local.net

- MATLAB Job Scheduler: vincent (this should be your username if you followed the instructions above)

- Username for MATLAB Job Scheduler access: vincent (this is your username)

Leave the rest as default.

Back in the MATLAB main window, on the Home tab, select Parallel > Select Parallel Environment > TaurusCluster.

11.4. Running Parallel Code

Move to a suitable working directory and run the following MATLAB command in the MATLAB command window to download the example code:

urlwrite('https://roberge.segfaults.net/wordpress/files/cluster/prime_matlab.m','prime_matlab.m','Username','cluster','Password','computing');

Open the prime_matlab.m file and run the code. MATLAB will prompt you for a username and password; enter your Taurus HPC Cluster credentials (the ones used to SSH onto the Taurus HPC Cluster). Use this MATLAB documentation to help you write your own parallel code.

11.5. Terminating a MATLAB Session

Once you are done using MATLAB on the Taurus HPC Cluster, go to Admin Center and destroy all your workers AND THEN destroy the job scheduler. The workers must be destroyed before the scheduler. The order is very important. To destroy your workers, right-click on your workers and select Destroy. You can use Ctrl-A to select all your workers at once. Do the same to destroy your scheduler.

Important note: Forgetting to destroy your scheduler and workers will create unnecessary load on the cluster and will clutter the Admin Center console of other users. Make sure that you destroy your workers and your scheduler in the correct order: workers first, scheduler last.

12. Conclusion

Thank you for using the Taurus HPC Cluster. If you have comments or requests, do not hesitate to contact the system administrator. If you would like to have your own Linux container (LXC) on the cluster so that you can install personalized packages (e.g., Python with different machine learning or artificial intelligence libraries), this is possible; discuss it with the system administrator.